Hi @Compresearcher,

Web scraping is a skill worth learning yourself, because a script written to scrape one website will (with almost 100% certainty) not work on a different website. You mentioned that you are new to R, and I wish you the best in your learning experience.

Also, you should know that scraping forums can take a long, long, loooooong time, especially forums with many threads and many pages. So I wrote my functions so that you can choose exactly which pages to scrape (explained a bit more below).

- Install any of the packages below that you don't already have, then run the code to define the functions:

```r
library(rvest)
library(dplyr)
library(purrr)
library(stringr)

# ===============================================================
# HELPER FUNCTIONS
# ===============================================================

# Get thread info (thread title and URL) from one page of the forum index
scrape_thread_info <- function(page_url){
  html <- page_url %>%
    read_html() %>%
    html_nodes(css = ".icons+ a")   # the thread title links

  tibble(
    title = html %>% html_text(),
    url   = html %>% html_attr(name = "href")
  )
}

# Check if a thread has a single page (TRUE when no ".brace" element is found).
# Note: scrape_gs_pages() below does not call this helper, so only the
# first page of each thread gets scraped.
has_single_page <- function(thread_url){
  thread_url %>%
    read_html() %>%
    html_node(css = ".brace") %>%
    html_text() %>%
    is.na()
}

# Scrape the text of every post on a thread page
scrape_posts <- function(thread_link){
  thread_link %>%
    read_html() %>%
    html_nodes(css = ".message_data") %>%
    html_text() %>%
    str_squish()   # trim the ends and collapse runs of whitespace
}
```
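If you want to check what each helper's selector picks up before pointing it at the live forum, you can run the same rvest calls on a literal HTML string, because `read_html()` accepts one. The markup and the example.com URLs below are made up purely to match the selectors; the forum's real pages are more complex and may change.

```r
library(rvest)
library(stringr)

# Hypothetical markup, written only to match the selectors used in the helpers
index_page <- read_html('
  <div>
    <img class="icons" src="x.gif"> <a href="https://example.com/thread-1.html">First thread</a>
    <img class="icons" src="x.gif"> <a href="https://example.com/thread-2.html">Second thread</a>
    <a href="https://example.com/not-a-thread.html">Unrelated link</a>
  </div>')

# scrape_thread_info(): ".icons+ a" matches an <a> whose immediately
# preceding sibling element has class "icons", so the third link is skipped
thread_links <- html_nodes(index_page, css = ".icons+ a")
html_text(thread_links)            # "First thread" "Second thread"
html_attr(thread_links, "href")    # the two thread-N.html URLs

# has_single_page(): html_node() returns a "missing" node when nothing
# matches, and html_text() turns that into NA
thread_page <- read_html('<div class="message_data">  Only   one
  post here </div>')
is.na(html_text(html_node(thread_page, css = ".brace")))   # TRUE

# scrape_posts(): str_squish() trims the ends and collapses inner whitespace
str_squish(html_text(html_nodes(thread_page, css = ".message_data")))
# "Only one post here"
```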

```r
# ================================================================
# MAIN FUNCTION FOR SCRAPING
# ================================================================

scrape_gs_pages <- function(pages){
  # Find the number of pages in the forum and build the URL of each page
  page_1_url  <- "https://www.gearslutz.com/board/mastering-forum/"
  page_1_html <- read_html(page_1_url)

  n_pages <- page_1_html %>%
    html_nodes(css = ".inner_nav .smallfont") %>%
    html_text() %>%
    str_extract(pattern = "\\d+") %>%
    as.numeric() %>%
    max(na.rm = TRUE)

  # Page 1 has no index number; pages 2..n follow the "indexN.html" pattern.
  # The if() guard stops 2:n_pages from counting backwards when n_pages is 1.
  page_urls <- c(
    page_1_url,
    if (n_pages > 1) paste0(page_1_url, "index", 2:n_pages, ".html")
  )[pages]

  # Get the thread info for the pages of interest
  master <- map_dfr(page_urls, scrape_thread_info)

  # Scrape the posts of every thread and store them in a list-column
  master %>%
    mutate(posts = map(url, scrape_posts))
}
```
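The `pages` argument is just an index into the vector of forum-index URLs that `scrape_gs_pages()` builds. The sketch below rebuilds that vector offline for a hypothetical forum of 5 pages (the real function reads the page count from the site), so you can see which URLs a given `pages` value would request.

```r
page_1_url <- "https://www.gearslutz.com/board/mastering-forum/"
n_pages <- 5  # hypothetical; scrape_gs_pages() reads the real count from page 1

# Same URL pattern as in scrape_gs_pages(): page 1, then index2.html, index3.html, ...
page_urls <- c(page_1_url, paste0(page_1_url, "index", 2:n_pages, ".html"))

page_urls[c(1, 2)]
# "https://www.gearslutz.com/board/mastering-forum/"
# "https://www.gearslutz.com/board/mastering-forum/index2.html"

page_urls[4:5]  # a later batch of pages
page_urls[7]    # NA: there is no page 7, so keep `pages` within 1:n_pages
```

This is also what lets you split a long scrape into batches, for example `pages = 1:5` in one run and `pages = 6:10` in the next.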

- Use the main function by specifying, as a numeric vector, the forum pages that you want to scrape. The example below scrapes pages 1 and 2:

```r
forum_data <- scrape_gs_pages(pages = c(1, 2))
forum_data
```

```
# A tibble: 104 x 3
title url posts
<chr> <chr> <list>
1 Targeting Mastering Loudness for Streaming (LUFS, Spotify, YouTube)-Why ~ https://www.gearslutz.com/board/mastering-forum/1252522-targeting-mastering-loudness-streaming-lufs-spotify-y~ <chr [30~
2 Mastering Studio robbed - Stolen Gear List https://www.gearslutz.com/board/mastering-forum/1175879-mastering-studio-robbed-stolen-gear-list.html <chr [30~
3 New Sub-Forum https://www.gearslutz.com/board/mastering-forum/885955-new-sub-forum.html <chr [1]>
4 Mastering Forum Guidelines https://www.gearslutz.com/board/mastering-forum/150125-mastering-forum-guidelines.html <chr [1]>
5 Lynx Hilo still a good purchase in 2019? https://www.gearslutz.com/board/mastering-forum/1269153-lynx-hilo-still-good-purchase-2019-a.html <chr [30~
6 Leveller https://www.gearslutz.com/board/mastering-forum/1271943-leveller.html <chr [1]>
7 Opinions on using EQ Match or other similar tools in the mastering proce~ https://www.gearslutz.com/board/mastering-forum/1271922-opinions-using-eq-match-other-similar-tools-mastering~ <chr [1]>
8 About measurement methods, scales and their comparability https://www.gearslutz.com/board/mastering-forum/1271730-about-measurement-methods-scales-their-comparability.~ <chr [26~
9 Hendy Amps Michelangelo https://www.gearslutz.com/board/mastering-forum/987380-hendy-amps-michelangelo.html <chr [30~
10 Extreme Mastering or Crazy Daisy https://www.gearslutz.com/board/mastering-forum/199340-extreme-mastering-crazy-daisy.html <chr [6]>
# ... with 94 more rows
```

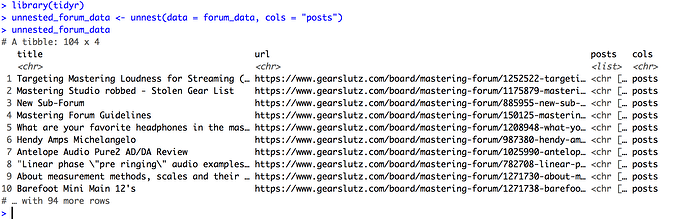

As you can see, the `posts` column is a list-column, so you will need the `unnest()` function from the tidyr package to see the actual posts:

```r
library(tidyr)

unnested_forum_data <- unnest(data = forum_data, cols = "posts")
unnested_forum_data
```

```
# A tibble: 1,992 x 3
title url posts
<chr> <chr> <chr>
1 Targeting Mastering Loudness for Streami~ https://www.gearslutz.com/board/mastering-forum/1252522-targe~ "Below I am sharing something that I send to my mastering clients when they inquire abou~
2 Targeting Mastering Loudness for Streami~ https://www.gearslutz.com/board/mastering-forum/1252522-targe~ "Hey Justin, Great post. For awhile I got on the bandwagon of multiple masters, one for ~
3 Targeting Mastering Loudness for Streami~ https://www.gearslutz.com/board/mastering-forum/1252522-targe~ Hey Christopher, Thanks! Yes, density has become a desired sound quality for sure. In an~
4 Targeting Mastering Loudness for Streami~ https://www.gearslutz.com/board/mastering-forum/1252522-targe~ You suggested one master which I love that idea or Would you recommend a 16bit/44.1 mast~
5 Targeting Mastering Loudness for Streami~ https://www.gearslutz.com/board/mastering-forum/1252522-targe~ Quote: Originally Posted by sdd17 You suggested one master which I love that idea or Wou~
6 Targeting Mastering Loudness for Streami~ https://www.gearslutz.com/board/mastering-forum/1252522-targe~ Delivering -14LUFS masters, unless specifically asked for, in any commercial genre, woul~
7 Targeting Mastering Loudness for Streami~ https://www.gearslutz.com/board/mastering-forum/1252522-targe~ Thanks for the post. Absolute truth. The one time I mastered to -14LUFS, the client comp~
8 Targeting Mastering Loudness for Streami~ https://www.gearslutz.com/board/mastering-forum/1252522-targe~ Quote: Originally Posted by Dave Polich I think we’re done with this stupidity regarding~
9 Targeting Mastering Loudness for Streami~ https://www.gearslutz.com/board/mastering-forum/1252522-targe~ "Quote: Originally Posted by Trakworx Below I am sharing something that I send to my mas~
10 Targeting Mastering Loudness for Streami~ https://www.gearslutz.com/board/mastering-forum/1252522-targe~ Quote: Originally Posted by Trakworx make one digital master that sounds good, is not ov~
# ... with 1,982 more rows
```
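If list-columns are new to you, here is a toy version of what `unnest()` does, using made-up titles and posts shaped like `forum_data`. Each element of `posts` becomes its own row, and the other columns are repeated alongside it.

```r
library(tibble)
library(tidyr)

# Made-up data with the same shape as forum_data: one row per thread,
# with the posts stored as a list of character vectors
toy <- tibble(
  title = c("Thread A", "Thread B"),
  posts = list(c("post 1", "post 2", "post 3"), "only post")
)

toy_long <- unnest(toy, cols = posts)
toy_long
# 4 rows: "Thread A" three times (once per post), then "Thread B" once
```

This is how the 104 threads above turn into 1,992 rows, one per post.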

I hope this helps.