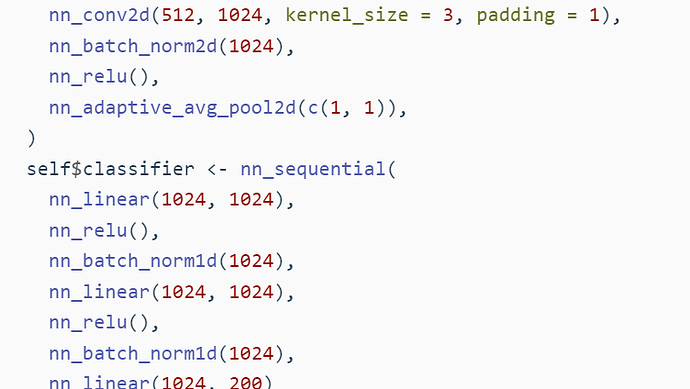

Hi everyone, I recently read chapter 18, "Image classification, take two: Improving performance", of the book Deep Learning and Scientific Computing with R torch.

I noticed that in the example code, some batch norm layers come after the activation layer, while others come before it.
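
To make clear what I mean by the two orderings, here is a small sketch in plain Python. It is not the book's R torch code: the `batch_norm` helper is a hypothetical simplification (training-mode statistics over a batch of scalars, no learnable scale or shift), and the input values are made up. It only shows that the two orderings compute different things.

```python
import math

def relu(xs):
    # Element-wise ReLU activation.
    return [max(0.0, x) for x in xs]

def batch_norm(xs, eps=1e-5):
    # Hypothetical simplified batch norm: normalize the batch to
    # zero mean and unit variance, without learnable parameters.
    mean = sum(xs) / len(xs)
    var = sum((x - mean) ** 2 for x in xs) / len(xs)
    return [(x - mean) / math.sqrt(var + eps) for x in xs]

# Made-up batch of pre-activation values.
batch = [-2.0, -1.0, 0.0, 1.0, 2.0]

bn_then_act = relu(batch_norm(batch))  # batch norm before activation
act_then_bn = batch_norm(relu(batch))  # batch norm after activation

print(bn_then_act)
print(act_then_bn)
```

With batch norm first, the output of the block is still clipped at zero by the ReLU. With batch norm last, the output is re-centered around zero, so the next layer sees negative values too.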

Does anybody know why? I'm new to deep learning. Thanks a lot!