Hi, I am new to data science and have just started to learn R. Is there a way to use the GPU for plotting data, either with plain plot() or with ggplot()?

For my assignment I have been given a huge dataframe to work with.

So any helpful answer will be appreciated.

My advice is not to attempt to plot every coordinate you can extract from a huge dataframe; it's not as if that will give the human eye anything useful to interpret. Summarise your data and make an appropriate chart, one that actually tells the viewer something.

The question in my assignment is: Get a scatterplot of "averageRating" and "numVotes" and interpret it carefully,

where the dataframe has dimensions 1300512 x 3.

That's a trivial size for R to handle. No need for any GPU.

As Nir mentioned above, you are likely to want to summarise the data before plotting it anyway. However, you could easily produce a scatter plot of over a million points if you wanted to; the problem would be in making sense of the plot.
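One way to summarise before plotting is to count points per 2D bin rather than drawing each one. The following is only a sketch: the data are simulated (the distributions, the seed, and the bin count are my assumptions), and only the column names and the row count come from the question.

```r
library(ggplot2)

set.seed(1)
n <- 1300512  # row count quoted in the question

# Simulated stand-in for the real data (assumption: ratings between 1 and 10,
# heavily skewed vote counts that are always at least 1)
df <- data.frame(
  averageRating = round(runif(n, min = 1, max = 10), 1),
  numVotes      = round(rlnorm(n, meanlog = 4, sdlog = 2)) + 1
)

# Count the points falling in each 2D bin instead of drawing every point
p <- ggplot(df, aes(x = numVotes, y = averageRating)) +
  geom_bin2d(bins = 60) +
  scale_x_log10() +
  labs(x = "numVotes (log scale)", y = "averageRating", fill = "count")

print(p)
```

The fill colour then shows where the points are concentrated, which a solid cloud of overplotted dots cannot.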

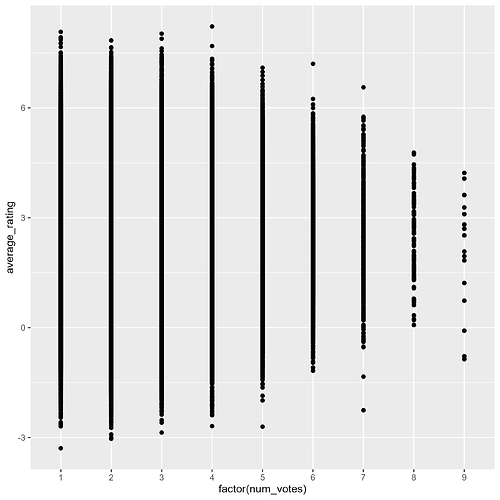

I simulated such a plot on my machine. I was able to produce it in about 60 seconds, though admittedly I wrote it to disk with ggsave, because the RStudio IDE viewer was not happy.
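A rough sketch of that kind of simulation, for anyone who wants to try it. The random distributions, the point styling, and the output file name are my assumptions rather than the exact code used, and timings will vary by machine.

```r
library(ggplot2)

set.seed(42)
n <- 1300512  # row count quoted in the question

# Simulated data at full precision (assumed distributions)
sim <- data.frame(
  averageRating = runif(n, min = 1, max = 10),
  numVotes      = rlnorm(n, meanlog = 4, sdlog = 2)
)

# Every row becomes one point; a tiny, semi-transparent marker limits overplotting
p <- ggplot(sim, aes(x = numVotes, y = averageRating)) +
  geom_point(shape = ".", alpha = 0.2)

# Write straight to a file instead of rendering in the IDE viewer
out_file <- file.path(tempdir(), "scatter_full.png")  # hypothetical file name
ggsave(out_file, plot = p, width = 8, height = 6, dpi = 150)
```

Saving to a file skips the interactive plot pane, which is the part that struggled with this many points.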

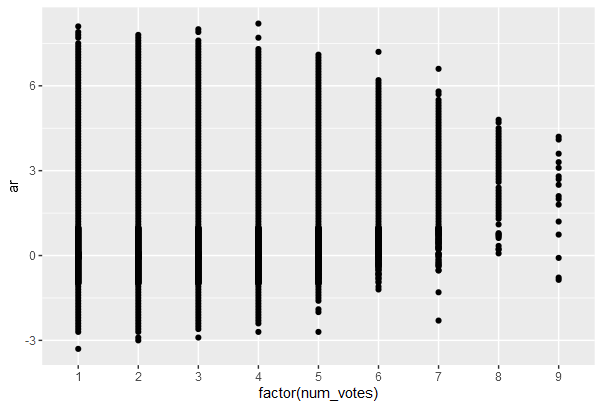

I also simulated reducing the ratings to two significant figures (rather than the full precision that my random data generator gave me), then made the data distinct on average rating and number of votes. This gave a much smaller dataset of about 3000 entries, but with effectively the same visual information. It executed (along with the code to apply signif rounding and dedup) in 0.47 seconds, and happily showed in my viewer.
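The rounding and dedup step can be sketched in base R as below. Again the data are simulated under assumed distributions, so the number of rows left after dedup will differ from the figure quoted above; it depends on how many (rating, votes) pairs repeat in your data.

```r
set.seed(42)
n <- 1300512  # row count quoted in the question

# Simulated data (assumed distributions; vote counts rounded to whole numbers)
sim <- data.frame(
  averageRating = runif(n, min = 1, max = 10),
  numVotes      = round(rlnorm(n, meanlog = 4, sdlog = 2))
)

# Round the ratings to two significant figures
sim$averageRating <- signif(sim$averageRating, 2)

# Keep one row per distinct (averageRating, numVotes) pair
reduced <- unique(sim[, c("averageRating", "numVotes")])

# Compare the sizes before and after
cat("rows before:", nrow(sim), " rows after:", nrow(reduced), "\n")

# Duplicated points draw on top of each other, so the picture is unchanged
plot(reduced$numVotes, reduced$averageRating, pch = ".",
     xlab = "numVotes", ylab = "averageRating")
```

Because identical points overlap exactly, dropping the duplicates changes nothing visible unless you rely on transparency to show density.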

The first image above is the plot of the full simulated data; the second is the plot of the reduced data.
